Mastering the Amazon A10 Algorithm – Or is it A9 still?

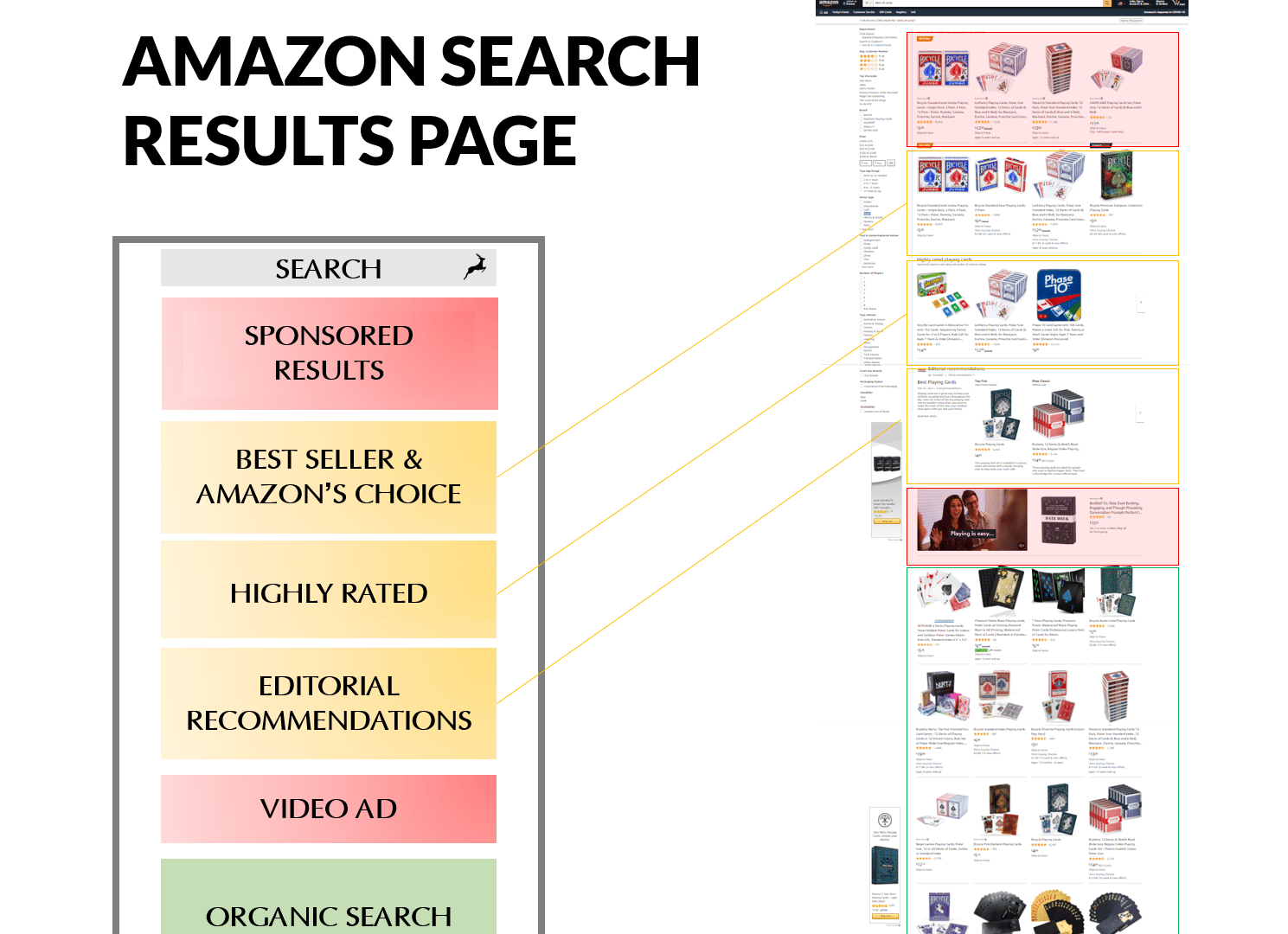

Wouldn't it be fantastic to know exactly what Amazon's A10 algorithm requires for a product to rank at the top of the search results? That would surely propel your…